Introduction

Users have grown accustomed to talking to their devices. Siri, Google Assistant, and Alexa have normalised voice interaction. Now users expect similar experiences in the apps they use—ordering food by voice, checking account balances through conversation, getting support without typing.

Building conversational AI is more accessible than ever, but there’s still nuance in getting it right. This guide covers how to implement voice interfaces and dialogue management that actually work.

Understanding the Conversational AI Stack

A conversational interface has several components:

┌─────────────────────────────────────────────────────────────┐
│                         User Input                          │
│                       (Voice or Text)                       │
└────────────────────────────┬────────────────────────────────┘
                             │
                             ▼
┌─────────────────────────────────────────────────────────────┐
│                  Speech-to-Text (if voice)                  │
│             (Whisper, Google STT, Apple Speech)             │
└────────────────────────────┬────────────────────────────────┘
                             │
                             ▼
┌─────────────────────────────────────────────────────────────┐
│               Natural Language Understanding                │
│           (Intent Recognition, Entity Extraction)           │
└────────────────────────────┬────────────────────────────────┘
                             │
                             ▼
┌─────────────────────────────────────────────────────────────┐
│                      Dialogue Manager                       │
│              (Context, State, Business Logic)               │
└────────────────────────────┬────────────────────────────────┘
                             │
                             ▼
┌─────────────────────────────────────────────────────────────┐
│                     Response Generation                     │
│              (Templates, LLM, Dynamic Content)              │
└────────────────────────────┬────────────────────────────────┘
                             │
                             ▼
┌─────────────────────────────────────────────────────────────┐
│                  Text-to-Speech (if voice)                  │
│           (System TTS, Cloud TTS, Voice Cloning)            │
└─────────────────────────────────────────────────────────────┘

Let’s implement each component.

Natural Language Understanding

NLU converts user text into structured data: what they want (intent) and the details (entities).
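To make that structure concrete, here is a minimal TypeScript sketch of an NLU result and one way a caller might act on its confidence score. The `NLUResult` shape follows the intent/entity split described above; the `decideAction` and `entitiesToRecord` helpers and the 0.8 / 0.5 thresholds are illustrative assumptions, not values from any particular NLU library.

```typescript
// Hypothetical shape for a parsed utterance: one intent plus extracted entities.
interface Entity {
  entity: string; // entity type, e.g. "amount"
  value: string;  // raw text value, e.g. "50"
}

interface NLUResult {
  intent: string;
  confidence: number; // 0.0 to 1.0
  entities: Entity[];
}

type NextAction = 'act' | 'confirm' | 'reprompt';

// Assumed thresholds; tune them against real traffic.
const ACT_THRESHOLD = 0.8;
const CONFIRM_THRESHOLD = 0.5;

// Decide how much to trust a parse before acting on it.
function decideAction(result: NLUResult): NextAction {
  if (result.confidence >= ACT_THRESHOLD) return 'act';
  if (result.confidence >= CONFIRM_THRESHOLD) return 'confirm';
  return 'reprompt';
}

// Collapse the entity list into a lookup keyed by entity type.
// If a type appears twice, the later value wins.
function entitiesToRecord(entities: Entity[]): Record<string, string> {
  const record: Record<string, string> = {};
  for (const e of entities) record[e.entity] = e.value;
  return record;
}

const example: NLUResult = {
  intent: 'transfer_money',
  confidence: 0.95,
  entities: [
    { entity: 'amount', value: '50' },
    { entity: 'recipient', value: 'John' },
  ],
};

console.log(decideAction(example)); // "act"
console.log(entitiesToRecord(example.entities)); // { amount: '50', recipient: 'John' }
```

A middle "confirm" band is a design choice: for a banking app it may be safer to ask "Did you want to transfer money?" than to act on a borderline parse.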

Using Rasa NLU

Rasa is an open-source option you can self-host:

# data/nlu.yml
version: "3.1"

nlu:
  - intent: check_balance
    examples: |
      - what's my balance
      - how much do I have
      - show me my account balance
      - what's in my account
      - check my balance

  - intent: transfer_money
    examples: |
      - transfer [50](amount) dollars to [John](recipient)
      - send [100](amount) to [Sarah](recipient)
      - pay [John Smith](recipient) [25](amount)
      - move [200](amount) to my savings

  - intent: find_transactions
    examples: |
      - show my recent transactions
      - what did I spend at [Woolworths](merchant) last week
      - show purchases from [yesterday](date)
      - transactions over [100](amount) dollars

# Backend: NLU service

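# Note: rasa.nlu.model.Interpreter was removed in Rasa 3.x, which is the
# version the "3.1" training data above targets. This version loads the
# model through Agent instead; check the call names against your installed release.
import asyncio

from rasa.core.agent import Agent


class NLUService:
    def __init__(self, model_path: str):
        # Load a trained model from the given path
        self.agent = Agent.load(model_path)

    async def parse(self, text: str) -> dict:
        # parse_message runs the NLU pipeline only; no dialogue policy is involved
        result = await self.agent.parse_message(text)
        return {
            "intent": result["intent"]["name"],
            "confidence": result["intent"]["confidence"],
            "entities": [
                {
                    "entity": e["entity"],
                    "value": e["value"],
                    "start": e["start"],
                    "end": e["end"],
                }
                for e in result["entities"]
            ],
        }


# Usage (await needs an async context, so wrap the call in a coroutine)
async def main():
    nlu = NLUService("./models/nlu")
    result = await nlu.parse("transfer 50 dollars to John")
    print(result)
    # {
    #   "intent": "transfer_money",
    #   "confidence": 0.95,
    #   "entities": [
    #     {"entity": "amount", "value": "50", "start": 9, "end": 11},
    #     {"entity": "recipient", "value": "John", "start": 23, "end": 27}
    #   ]
    # }


asyncio.run(main())

Using LLMs for Intent Recognition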

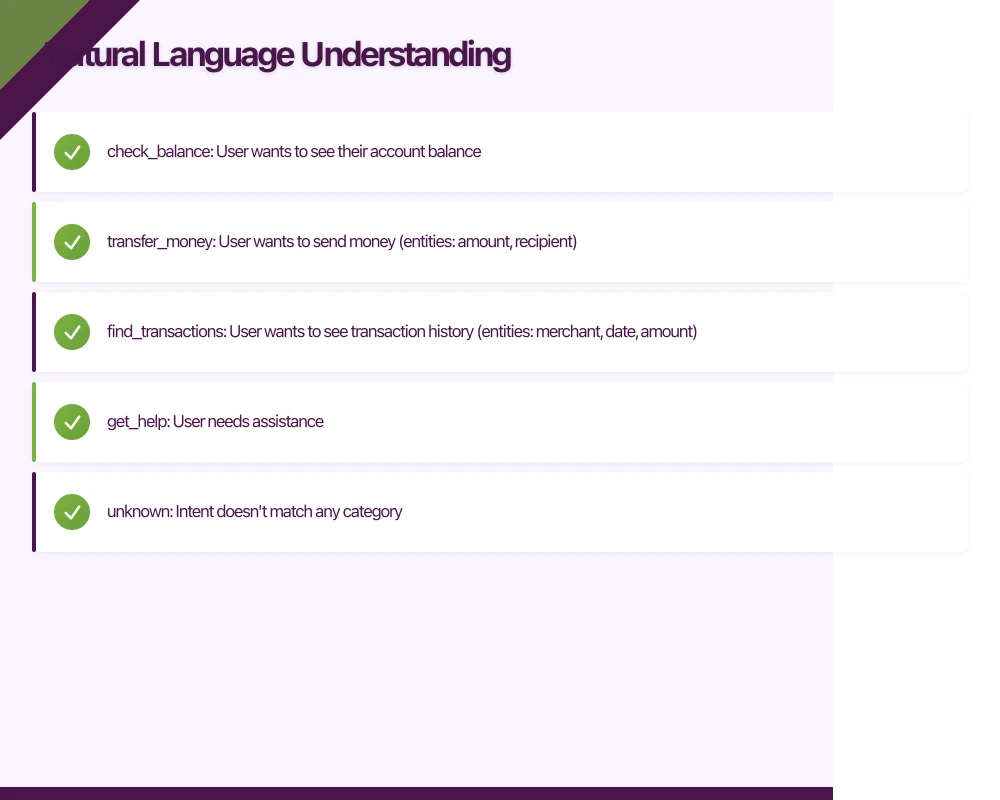

Modern LLMs can handle intent recognition without training:

import OpenAI from 'openai';

const openai = new OpenAI();

interface ParsedIntent {

intent: string;

entities: Record<string, string>;

confidence: number;

}

async function parseWithLLM(userMessage: string): Promise<ParsedIntent> {

const systemPrompt = `You are an intent classifier for a banking app.

Available intents:

- check_balance: User wants to see their account balance

- transfer_money: User wants to send money (entities: amount, recipient)

- find_transactions: User wants to see transaction history (entities: merchant, date, amount)

- get_help: User needs assistance

- unknown: Intent doesn't match any category

Respond with JSON only:

{

"intent": "intent_name",

"entities": {"entity_name": "value"},

"confidence": 0.0-1.0

}`;

const response = await openai.chat.completions.create({

model: 'gpt-4o-mini',

messages: [

{ role: 'system', content: systemPrompt },

{ role: 'user', content: userMessage },

],

response_format: { type: 'json_object' },

temperature: 0,

});

return JSON.parse(response.choices[0].message.content!);

}

Building the Dialogue Manager

The dialogue manager maintains conversation context and decides what happens next.
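Because the dialogue manager acts on whatever the NLU layer hands it, it can be worth checking that output first, particularly when it comes from an LLM's JSON response, which may be malformed or name an intent you never defined. The sketch below is one way to do that. `KNOWN_INTENTS`, `validateParsedIntent`, and the choice to degrade to `unknown` are assumptions for illustration; the intent names are borrowed from the banking examples earlier in this guide.

```typescript
// Intent names assumed from this guide's banking examples.
const KNOWN_INTENTS: string[] = [
  'check_balance',
  'transfer_money',
  'find_transactions',
  'get_help',
  'unknown',
];

interface ParsedIntent {
  intent: string;
  entities: Record<string, string>;
  confidence: number;
}

// Fresh "unknown" result each time so callers can't mutate a shared object.
function unknownIntent(): ParsedIntent {
  return { intent: 'unknown', entities: {}, confidence: 0 };
}

// Turn raw model output (a JSON string) into a checked ParsedIntent.
// Anything malformed or unrecognised degrades to "unknown" instead of
// throwing, so the caller can ask the user to rephrase.
function validateParsedIntent(raw: string): ParsedIntent {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch (_err) {
    return unknownIntent();
  }
  if (typeof data !== 'object' || data === null || Array.isArray(data)) {
    return unknownIntent();
  }

  const obj = data as Record<string, unknown>;
  const intent = obj.intent;
  if (typeof intent !== 'string' || KNOWN_INTENTS.indexOf(intent) === -1) {
    return unknownIntent();
  }

  // Keep string and number entity values (numbers become strings, 50 -> "50");
  // drop anything else, such as nested objects or null.
  const entities: Record<string, string> = {};
  const rawEntities = obj.entities;
  if (typeof rawEntities === 'object' && rawEntities !== null && !Array.isArray(rawEntities)) {
    const entityMap = rawEntities as Record<string, unknown>;
    for (const key of Object.keys(entityMap)) {
      const value = entityMap[key];
      if (typeof value === 'string' || typeof value === 'number') {
        entities[key] = String(value);
      }
    }
  }

  // Clamp confidence into [0, 1]; a missing or non-numeric value counts as 0.
  const c = typeof obj.confidence === 'number' && isFinite(obj.confidence) ? obj.confidence : 0;
  const confidence = Math.min(1, Math.max(0, c));

  return { intent, entities, confidence };
}
```

Returning `unknown` rather than throwing keeps the failure inside the conversation: the dialogue manager can respond with a re-prompt instead of surfacing an error.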

State Machine Approach

For simple flows, a state machine works well:

interface ConversationState {

currentStep: string;

context: Record<string, any>;

history: Array<{ role: 'user' | 'assistant'; content: string }>;

}

interface DialogueFlow {

steps: Record<string, DialogueStep>;

initialStep: string;

}

interface DialogueStep {
  // A prompt is either fixed text or built from the conversation context
  prompt?: string | ((context: Record<string, any>) => string);
  requiredEntities?: string[];
  // Receives the whole context, matching how DialogueManager calls it
  validate?: (context: Record<string, any>) => boolean;
  onComplete: string | ((context: Record<string, any>) => Promise<string>);
}

const transferMoneyFlow: DialogueFlow = {
  initialStep: 'get_recipient',
  steps: {
    get_recipient: {
      prompt: "Who would you like to send money to?",
      requiredEntities: ['recipient'],
      onComplete: 'get_amount',
    },
    get_amount: {
      prompt: "How much would you like to transfer?",
      requiredEntities: ['amount'],
      validate: (ctx) => parseFloat(ctx.amount) > 0,
      onComplete: 'confirm',
    },

confirm: {

prompt: (ctx) => `Transfer $${ctx.amount} to ${ctx.recipient}. Is that correct?`,

requiredEntities: ['confirmation'],

onComplete: async (ctx) => {

if (ctx.confirmation === 'yes') {

await executeTransfer(ctx.recipient, ctx.amount);

return 'success';

}

return 'cancelled';

},

},

success: {

prompt: (ctx) => `Done! $${ctx.amount} has been sent to ${ctx.recipient}.`,

onComplete: 'end',

},

cancelled: {

prompt: "Transfer cancelled. Is there anything else I can help with?",

onComplete: 'end',

},

},

};

class DialogueManager {

private state: ConversationState;

private flows: Record<string, DialogueFlow>;

constructor() {

this.state = {

currentStep: 'idle',

context: {},

history: [],

};

this.flows = {};

}

registerFlow(intent: string, flow: DialogueFlow) {

this.flows[intent] = flow;

}

async processInput(

text: string,

parsedIntent: ParsedIntent

): Promise<string> {

// Add to history

this.state.history.push({ role: 'user', content: text });

// If idle, start new flow based on intent

if (this.state.currentStep === 'idle') {

const flow = this.flows[parsedIntent.intent];

if (flow) {

this.state.currentStep = flow.initialStep;

// Merge any entities from initial message

Object.assign(this.state.context, parsedIntent.entities);

} else {

return "I'm not sure how to help with that.";

}

} else {

// In active flow, extract entities

Object.assign(this.state.context, parsedIntent.entities);

}

return this.advanceFlow();

}

  private async advanceFlow(): Promise<string> {
    const flow = this.getCurrentFlow();
    if (!flow) return "Something went wrong. Let's start over.";

    const step = flow.steps[this.state.currentStep];
    if (!step) return "Something went wrong.";

    // Resolve the step's prompt, which may be text or a function of the context
    const prompt = typeof step.prompt === 'function'
      ? await step.prompt(this.state.context)
      : step.prompt;

    // Check if we have all required entities
    const required = step.requiredEntities || [];
    const missingEntities = required.filter((e) => !this.state.context[e]);
    if (missingEntities.length > 0) {
      // Need more info
      return prompt || `I need the ${missingEntities[0]}`;
    }

    // Validate if needed; discard rejected values so the step asks again
    if (step.validate && !step.validate(this.state.context)) {
      for (const e of required) delete this.state.context[e];
      return "That doesn't seem right. Could you try again?";
    }

    // Move to next step
    const nextStep = typeof step.onComplete === 'function'
      ? await step.onComplete(this.state.context)
      : step.onComplete;
    this.state.currentStep = nextStep;

    // Steps that collect nothing (such as 'success') exist only to announce
    // a result, so their prompt must be shown rather than skipped
    const announcement = required.length === 0 ? prompt : undefined;

    if (nextStep === 'end') {
      this.reset();
      return announcement || "Is there anything else I can help you with?";
    }

    // Get next prompt
    const next = await this.advanceFlow();
    return announcement ? `${announcement} ${next}` : next;
  }

private getCurrentFlow(): DialogueFlow | null {

for (const flow of Object.values(this.flows)) {

if (flow.steps[this.state.currentStep]) {

return flow;

}

}

return null;

}

private reset() {

this.state = {

currentStep: 'idle',

context: {},

history: [],

};

}

}

LLM-Powered Dialogue

For more natural conversations, use an LLM as the dialogue manager:

interface ConversationContext {

userId: string;

history: Array<{ role: 'user' | 'assistant'; content: string }>;

userData: {

accountBalance?: number;

recentTransactions?: Transaction[];

contacts?: Contact[];

};

}

class LLMDialogueManager {

private context: ConversationContext;

constructor(userId: string) {

this.context = {

userId,

history: [],

userData: {},

};

}

async processMessage(userMessage: string): Promise<string> {

// Add user message to history

this.context.history.push({ role: 'user', content: userMessage });

// Fetch relevant user data

await this.updateUserData(userMessage);

// Build system prompt with context

const systemPrompt = this.buildSystemPrompt();

    const response = await openai.chat.completions.create({
      model: 'gpt-4o',
      messages: [
        { role: 'system', content: systemPrompt },
        // History already ends with the current user message (pushed above),
        // so it is not appended a second time here
        ...this.context.history.slice(-10), // Last 10 messages for context
      ],
      tools: this.getAvailableTools(),
      temperature: 0.7,
    });

    const assistantMessage = response.choices[0].message;

    let reply: string;
    if (assistantMessage.tool_calls) {
      // Handle tool calls, then turn their results into a user-facing reply
      const toolResults = await this.executeTools(assistantMessage.tool_calls);
      reply = await this.generateFinalResponse(toolResults);
    } else {
      reply = assistantMessage.content || "I'm not sure how to help with that.";
    }

    // Record the reply on both paths so later turns see the full conversation
    this.context.history.push({ role: 'assistant', content: reply });
    return reply;
  }

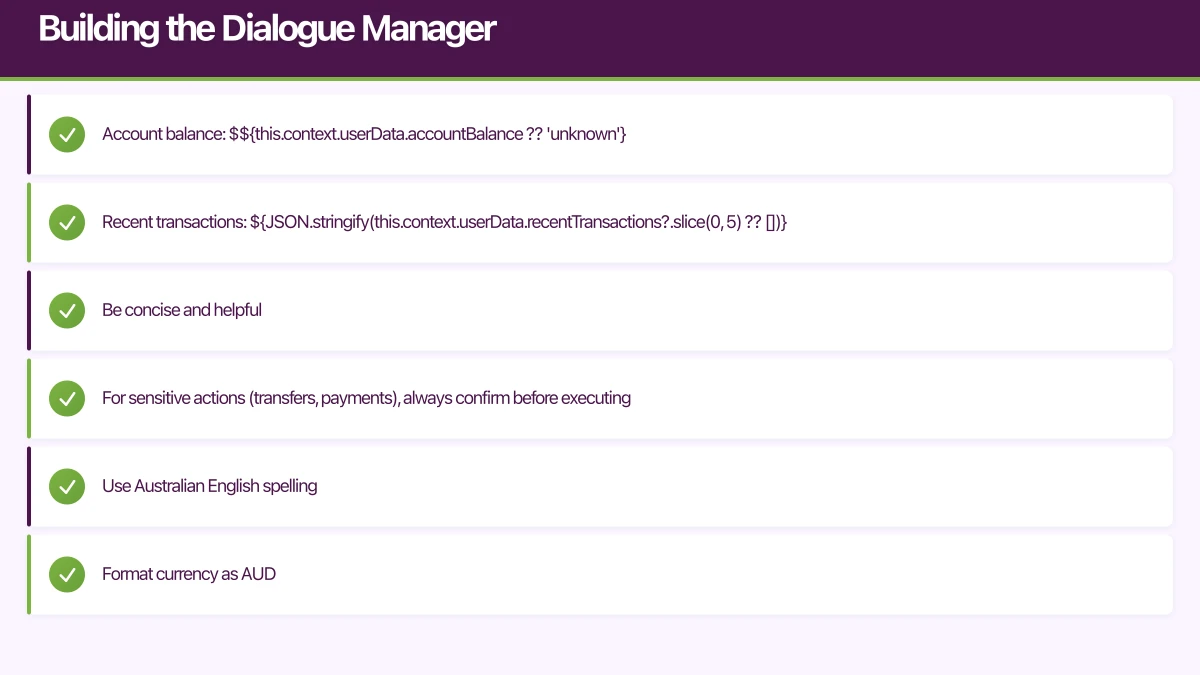

private buildSystemPrompt(): string {

return `You are a helpful banking assistant for an Australian mobile app.

Current user data:

- Account balance: $${this.context.userData.accountBalance ?? 'unknown'}

- Recent transactions: ${JSON.stringify(this.context.userData.recentTransactions?.slice(0, 5) ?? [])}

Guidelines:

- Be concise and helpful

- For sensitive actions (transfers, payments), always confirm before executing

- Use Australian English spelling

- Format currency as AUD

- If you need information to complete a task, ask for it naturally

- Never make up account information`;

}

private getAvailableTools() {

return [

{

type: 'function' as const,

function: {

name: 'get_account_balance',

description: 'Get the current account balance',

parameters: { type: 'object', properties: {} },

},

},

{

type: 'function' as const,

function: {

name: 'transfer_money',

description: 'Transfer money to another account',

parameters: {

type: 'object',

properties: {

recipient: { type: 'string', description: 'Name or account of recipient' },

amount: { type: 'number', description: 'Amount in AUD' },

},

required: ['recipient', 'amount'],

},

},

},

{

type: 'function' as const,

function: {

name: 'get_transactions',

description: 'Get recent transactions',

parameters: {

type: 'object',

properties: {

days: { type: 'number', description: 'Number of days to look back' },

merchant: { type: 'string', description: 'Filter by merchant name' },

},

},

},

},

];

}

private async executeTools(toolCalls: any[]): Promise<Record<string, any>> {

const results: Record<string, any> = {};

for (const call of toolCalls) {

const args = JSON.parse(call.function.arguments);

switch (call.function.name) {

case 'get_account_balance':

results[call.id] = await this.getBalance();

break;

case 'transfer_money':

results[call.id] = await this.initiateTransfer(args.recipient, args.amount);

break;

case 'get_transactions':

results[call.id] = await this.getTransactions(args.days, args.merchant);

break;

}

}

return results;

}

// ... implement actual banking operations

}

Mobile Integration

iOS Voice Interface

import Speech

import AVFoundation

class VoiceAssistant: ObservableObject {

@Published var isListening = false

@Published var transcript = ""

@Published var response = ""

@Published var isSpeaking = false

private let speechRecognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-AU"))

private var recognitionTask: SFSpeechRecognitionTask?

private let audioEngine = AVAudioEngine()

private let synthesizer = AVSpeechSynthesizer()

func startConversation() async {

guard await requestPermissions() else { return }

await MainActor.run {

isListening = true

transcript = ""

}

do {

try await startListening()

} catch {

await MainActor.run {

isListening = false

}

}

}

private func startListening() async throws {

let request = SFSpeechAudioBufferRecognitionRequest()

request.shouldReportPartialResults = true

let inputNode = audioEngine.inputNode

let recordingFormat = inputNode.outputFormat(forBus: 0)

inputNode.installTap(onBus: 0, bufferSize: 1024, format: recordingFormat) { buffer, _ in

request.append(buffer)

}

audioEngine.prepare()

try audioEngine.start()

        recognitionTask = speechRecognizer?.recognitionTask(with: request) { [weak self] result, error in
            if let result = result {
                Task { @MainActor in
                    self?.transcript = result.bestTranscription.formattedString
                }
                if result.isFinal {
                    self?.stopListening()
                    Task {
                        await self?.processTranscript(result.bestTranscription.formattedString)
                    }
                    return
                }
            }
            // On failure, release the microphone instead of leaving the engine running
            if error != nil {
                self?.stopListening()
            }
        }

}

private func stopListening() {

audioEngine.stop()

audioEngine.inputNode.removeTap(onBus: 0)

recognitionTask?.cancel()

Task { @MainActor in

isListening = false

}

}

private func processTranscript(_ text: String) async {

// Send to backend dialogue manager

do {

let response = try await sendToDialogueManager(text)

await MainActor.run {

self.response = response

}

// Speak the response

await speak(response)

} catch {

await MainActor.run {

self.response = "Sorry, I couldn't process that. Please try again."

}

}

}

private func sendToDialogueManager(_ text: String) async throws -> String {

var request = URLRequest(url: URL(string: "\(Config.apiURL)/assistant/chat")!)

request.httpMethod = "POST"

request.setValue("application/json", forHTTPHeaderField: "Content-Type")

request.httpBody = try JSONEncoder().encode(["message": text])

let (data, _) = try await URLSession.shared.data(for: request)

let result = try JSONDecoder().decode(AssistantResponse.self, from: data)

return result.message

}

private func speak(_ text: String) async {

await MainActor.run {

isSpeaking = true

}

let utterance = AVSpeechUtterance(string: text)

utterance.voice = AVSpeechSynthesisVoice(language: "en-AU")

utterance.rate = AVSpeechUtteranceDefaultSpeechRate

await withCheckedContinuation { continuation in

let delegate = SpeechDelegate {

continuation.resume()

}

synthesizer.delegate = delegate

synthesizer.speak(utterance)

}

await MainActor.run {

isSpeaking = false

}

}

}

Android Voice Interface

class VoiceAssistant(private val context: Context) : ViewModel() {

private val _isListening = MutableStateFlow(false)

val isListening: StateFlow<Boolean> = _isListening

private val _transcript = MutableStateFlow("")

val transcript: StateFlow<String> = _transcript

private val _response = MutableStateFlow("")

val response: StateFlow<String> = _response

private var speechRecognizer: SpeechRecognizer? = null

private var textToSpeech: TextToSpeech? = null

init {

textToSpeech = TextToSpeech(context) { status ->

if (status == TextToSpeech.SUCCESS) {

textToSpeech?.language = Locale("en", "AU")

}

}

}

fun startConversation() {

if (!SpeechRecognizer.isRecognitionAvailable(context)) {

_response.value = "Speech recognition not available"

return

}

speechRecognizer = SpeechRecognizer.createSpeechRecognizer(context)

speechRecognizer?.setRecognitionListener(createListener())

val intent = Intent(RecognizerIntent.ACTION_RECOGNIZE_SPEECH).apply {

putExtra(RecognizerIntent.EXTRA_LANGUAGE_MODEL, RecognizerIntent.LANGUAGE_MODEL_FREE_FORM)

putExtra(RecognizerIntent.EXTRA_LANGUAGE, "en-AU")

putExtra(RecognizerIntent.EXTRA_PARTIAL_RESULTS, true)

}

speechRecognizer?.startListening(intent)

_isListening.value = true

}

private fun createListener() = object : RecognitionListener {

override fun onResults(results: Bundle?) {

_isListening.value = false

val matches = results?.getStringArrayList(SpeechRecognizer.RESULTS_RECOGNITION)

val text = matches?.firstOrNull() ?: return

_transcript.value = text

viewModelScope.launch {

processTranscript(text)

}

}

override fun onPartialResults(partialResults: Bundle?) {

val matches = partialResults?.getStringArrayList(SpeechRecognizer.RESULTS_RECOGNITION)

matches?.firstOrNull()?.let { _transcript.value = it }

}

// ... other required overrides

override fun onReadyForSpeech(params: Bundle?) {}

override fun onBeginningOfSpeech() {}

override fun onRmsChanged(rmsdB: Float) {}

override fun onBufferReceived(buffer: ByteArray?) {}

override fun onEndOfSpeech() { _isListening.value = false }

override fun onError(error: Int) { _isListening.value = false }

override fun onEvent(eventType: Int, params: Bundle?) {}

}

private suspend fun processTranscript(text: String) {

try {

val response = sendToDialogueManager(text)

_response.value = response

speak(response)

} catch (e: Exception) {

_response.value = "Sorry, something went wrong."

}

}

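    private suspend fun sendToDialogueManager(text: String): String {
        // A client created per call leaks resources unless it is closed;
        // use {} closes it when the block finishes. For production, consider
        // one shared HttpClient for the whole app instead.
        return HttpClient(CIO) {
            install(ContentNegotiation) { json() }
        }.use { client ->
            val response: AssistantResponse = client.post("${Config.API_URL}/assistant/chat") {
                contentType(ContentType.Application.Json)
                setBody(mapOf("message" to text))
            }.body()
            response.message
        }
    }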

private fun speak(text: String) {

textToSpeech?.speak(text, TextToSpeech.QUEUE_FLUSH, null, "response")

}

override fun onCleared() {

speechRecognizer?.destroy()

textToSpeech?.shutdown()

}

}

Handling Errors Gracefully

Conversational AI fails often. Handle it well:

async function processWithFallback(userMessage: string): Promise<string> {

try {

// Try primary NLU/dialogue

return await dialogueManager.processMessage(userMessage);

} catch (error) {

// Log for debugging

console.error('Dialogue error:', error);

// Provide helpful fallback

return getFallbackResponse(userMessage);

}

}

function getFallbackResponse(userMessage: string): string {

// Check for common patterns even without NLU

const lowerMessage = userMessage.toLowerCase();

if (lowerMessage.includes('balance')) {

return "I'm having trouble right now. You can check your balance in the Accounts tab.";

}

if (lowerMessage.includes('transfer') || lowerMessage.includes('send')) {

return "I can't process that right now. To transfer money, tap the Transfer button in the app.";

}

if (lowerMessage.includes('help')) {

return "I'm having trouble understanding. You can reach our support team at 1300 XXX XXX or through the Help section in the app.";

}

return "I didn't quite catch that. Could you try saying it differently, or use the app menu for help?";

}

Conclusion

Conversational AI in mobile apps requires balancing sophistication with reliability. The key components are:

- NLU for understanding user intent and extracting entities

- Dialogue management for maintaining context and guiding conversations

- Graceful fallbacks when things don’t work

Start simple: a state machine for defined flows handles most use cases. Add LLM-powered dialogue for more natural interactions once you have the basics working. Always provide clear fallbacks when the AI fails—and it will fail.
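One way to keep fallbacks predictable is to cap how many times the assistant re-prompts before pointing the user at a non-conversational path. The sketch below assumes a hypothetical `FallbackTracker` class and a limit of two consecutive failures; the names, the limit, and the message wording are all illustrative.

```typescript
// Tracks consecutive misunderstandings and escalates once a limit is reached.
class FallbackTracker {
  private failures = 0;

  // maxFailures is an assumed default; pick a value that suits your users.
  constructor(private readonly maxFailures: number = 2) {}

  // Call when the assistant understood the user; clears the failure streak.
  recordSuccess(): void {
    this.failures = 0;
  }

  // Call when the assistant failed; returns the message to show the user.
  recordFailure(): string {
    this.failures += 1;
    if (this.failures >= this.maxFailures) {
      // Escalate and start counting afresh
      this.failures = 0;
      return "I'm still having trouble. You can use the app menu or the Help section instead.";
    }
    return "I didn't quite catch that. Could you try saying it differently?";
  }
}

const tracker = new FallbackTracker();
console.log(tracker.recordFailure()); // re-prompt
console.log(tracker.recordFailure()); // escalation to the app menu
```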

The best conversational interfaces feel helpful without trying to be too smart. Match your implementation complexity to your actual user needs. Users prefer a voice interface that handles three things well to one that attempts everything poorly.