Introduction

Most app ideas fail. Not because the technology does not work, but because the market does not want what you built. The Minimum Viable Product (MVP) approach exists to fail faster and cheaper—validating assumptions before committing full resources.

An MVP is not a half-baked product or a demo. It is the smallest version of your app that delivers genuine value to users while providing data to guide future development.

This guide covers practical strategies for building mobile app MVPs that actually validate your business hypotheses.

Why MVPs Fail

Before discussing how to build MVPs, understand why they fail:

Building Too Much

The most common mistake is including too many features. “Minimum” is harder than “viable.” Founders struggle to cut features they are emotionally attached to. The result is an MVP that takes six months instead of six weeks.

Measuring the Wrong Things

Downloads and registrations feel good but reveal little. An MVP should answer specific questions: Will users pay? Will they return? Will they refer others? Vanity metrics obscure signal.

Not Actually Viable

An MVP must deliver real value. If users complete their task successfully, that is viable. If they bounce because core functionality is broken or confusing, you learn nothing except that broken things do not work.

Building for Investors, Not Users

MVPs designed to impress investors often fail to serve users. Polished pitch decks and slick prototypes may raise money but provide no market validation. Users care whether your app solves their problem.

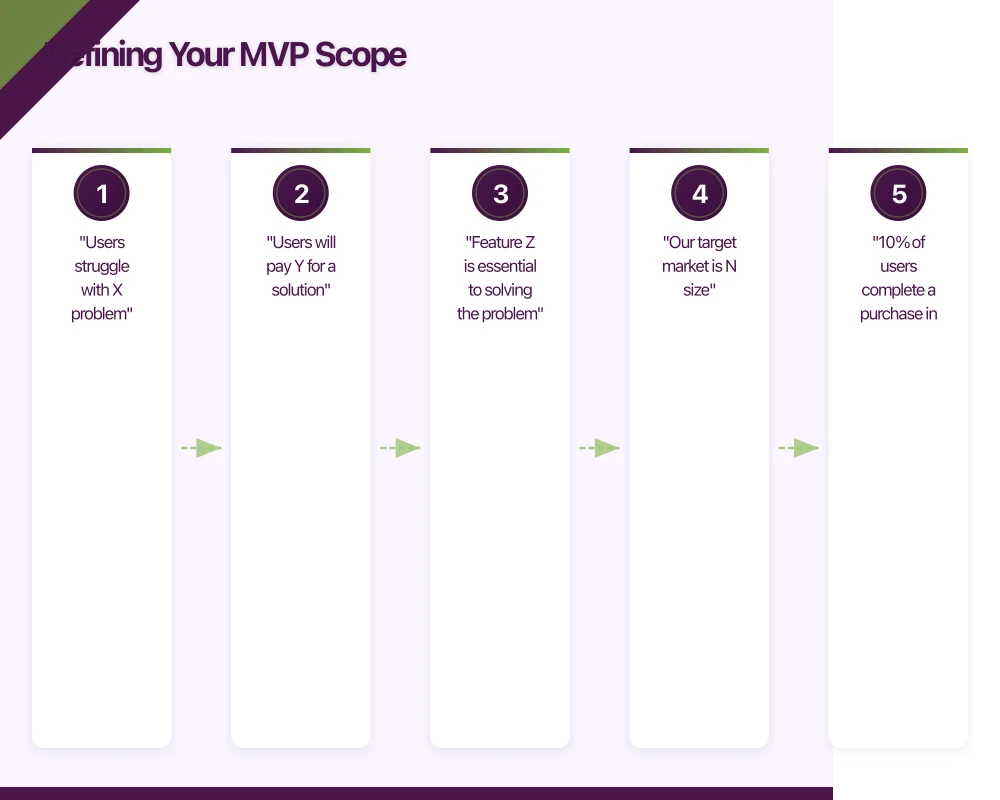

Defining Your MVP Scope

Start with Hypotheses

List your assumptions explicitly:

- “Users struggle with X problem”

- “Users will pay Y for a solution”

- “Feature Z is essential to solving the problem”

- “Our target market is N size”

Each assumption is a risk. Your MVP should test the riskiest assumptions first.

The One-Feature MVP

What is the single most important thing your app does? If you had to describe your app in one sentence, what would it be?

That one thing is your MVP. Everything else is noise until you validate the core.

Instagram launched as a photo-sharing app with filters. Not stories, not reels, not shopping—filters and sharing. The core was validated before expansion.

Uber launched in San Francisco as a black car service. Not UberX, not Uber Eats, not freight—premium car service in one city.

Feature Prioritisation Framework

For each potential feature, score:

- User value: How much does this help users accomplish their goal?

- Learning value: What will this teach us about our market?

- Implementation cost: How long will this take to build?

Calculate: (User Value + Learning Value) / Implementation Cost

Build the highest-scoring features first. Be ruthless about cutting low-scoring items.
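The scoring formula above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the 1-5 scale, the `Feature` class, and the sample backlog are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    user_value: int           # assumed 1-5 scale: how much it helps users reach their goal
    learning_value: int       # assumed 1-5 scale: how much it teaches us about the market
    implementation_cost: int  # assumed 1-5 scale: relative build effort (must be > 0)

    @property
    def score(self) -> float:
        # (User Value + Learning Value) / Implementation Cost
        return (self.user_value + self.learning_value) / self.implementation_cost


def prioritise(features: list[Feature]) -> list[Feature]:
    """Return features ordered from highest to lowest score."""
    return sorted(features, key=lambda f: f.score, reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Feature("Photo upload", user_value=5, learning_value=5, implementation_cost=2),
    Feature("Dark mode", user_value=2, learning_value=1, implementation_cost=2),
    Feature("Social sharing", user_value=4, learning_value=4, implementation_cost=4),
]

for feature in prioritise(backlog):
    print(f"{feature.name}: {feature.score:.2f}")
```

With these made-up numbers, photo upload scores 5.00, social sharing 2.00, and dark mode 1.50, so dark mode is the first candidate to cut.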

Define Success Criteria

Before building, define what success looks like:

- “10% of users complete a purchase in their first session”

- “30% of users return within 7 days”

- “Users spend an average of 5 minutes per session”

- “Net Promoter Score above 30”

Without predefined criteria, you will rationalise whatever results you get.
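One way to keep yourself honest is to write the criteria down as data before launch and compare results against them mechanically. The sketch below assumes hypothetical metric names and treats meeting a threshold as a pass; adapt both to your own criteria.

```python
# Thresholds mirror the example criteria above; the metric names are hypothetical.
SUCCESS_CRITERIA = {
    "first_session_purchase_rate": 0.10,  # 10% purchase in first session
    "day7_return_rate": 0.30,             # 30% return within 7 days
    "avg_session_minutes": 5.0,           # 5 minutes per session on average
    "net_promoter_score": 30.0,           # NPS of 30
}


def evaluate(observed: dict[str, float]) -> dict[str, bool]:
    """Compare observed metrics with thresholds that were fixed before launch."""
    results = {}
    for metric, threshold in SUCCESS_CRITERIA.items():
        value = observed.get(metric)
        # A metric that was never measured counts as a miss, not a silent skip.
        results[metric] = value is not None and value >= threshold
    return results


# Made-up observations, for illustration only.
observed = {
    "first_session_purchase_rate": 0.12,
    "day7_return_rate": 0.22,
    "avg_session_minutes": 6.5,
}

for metric, passed in evaluate(observed).items():
    print(f"{metric}: {'pass' if passed else 'miss'}")
```

Because the thresholds live in one place and predate the data, there is less room to move the goalposts afterwards.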

Technical Decisions for MVPs

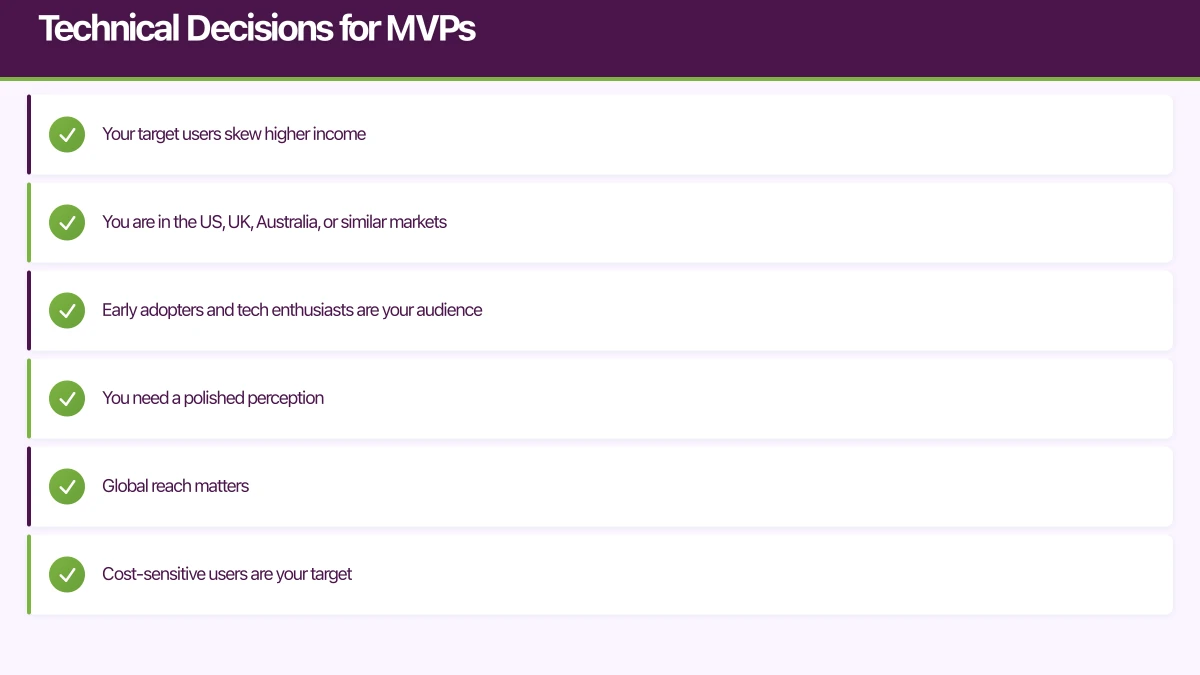

Platform Choice

For most MVPs, start with one platform:

Choose iOS if:

- Your target users skew higher income

- You are in the US, UK, Australia, or similar markets

- Early adopters and tech enthusiasts are your audience

- You need a polished perception

Choose Android if:

- Global reach matters

- Cost-sensitive users are your target

- You need the largest possible test audience

- Your target market is Android-dominant

Choose cross-platform if:

- You need both platforms for business reasons

- Your team has React Native or Flutter expertise

- Time to market is critical

Cross-platform is not inherently faster, even with a shared codebase. Building well for two platforms still requires testing, debugging, and optimising for both. One polished platform often beats two mediocre ones.

Backend Decisions

MVPs benefit from managed backend services that minimise operational overhead:

Firebase (Google)

- Authentication, database, storage, hosting, analytics

- Generous free tier

- Fast to implement

- Scales automatically

AWS Amplify

- Similar capabilities to Firebase

- Better for teams already using AWS

- More configuration complexity

Supabase

- Open-source Firebase alternative

- PostgreSQL-based (SQL vs NoSQL)

- Growing ecosystem

For most MVPs, Firebase provides the fastest path to launch. You can always migrate to custom infrastructure after validation.

Build vs Buy

Every feature has a build-or-buy decision:

| Feature | Build | Buy/Use |
|---|---|---|
| Authentication | Time-consuming, security risks | Firebase Auth, Auth0, Cognito |
| Payments | PCI compliance nightmare | Stripe, Square, RevenueCat |
| Analytics | Distracts from core | Firebase Analytics, Mixpanel, Amplitude |
| Push notifications | Platform complexity | OneSignal, Firebase Cloud Messaging |
| Search | Surprisingly complex | Algolia, Elasticsearch |
| Chat | Real-time infrastructure | SendBird, Stream, Firebase |

Build what differentiates your app. Buy everything else.

Technical Debt Strategy

MVPs accumulate technical debt by design. The goal is shipping, not perfection. However, strategic debt differs from reckless debt.

Acceptable MVP debt:

- Minimal test coverage (test critical paths only)

- Hardcoded configuration

- Limited error handling

- Basic UI polish

- Manual processes you could automate

Dangerous MVP debt:

- Security shortcuts

- Data model decisions that cannot migrate

- Architecture that cannot scale

- Dependencies on deprecated services

Ship fast, but do not build traps you cannot escape.

Building Your MVP

Sprint Zero: Foundation

Before building features, establish foundations:

- Project setup: Create repositories, configure CI/CD, establish coding standards

- Authentication: Users need accounts (usually)

- Navigation structure: Core app architecture

- Analytics integration: Instrument from day one

- Crash reporting: Know when things break

This foundation takes one to two weeks. It is not wasted time—you cannot validate anything if your app crashes constantly.

Core Feature Development

Build your core feature completely before adding secondary features. “Complete” means:

- Happy path works reliably

- Error states are handled gracefully

- Performance is acceptable

- UI communicates state clearly

A complete core feature beats three half-finished features.

Weekly Releases

Release internally every week. External releases might be less frequent, but internal builds keep the team honest about progress.

Each release should be potentially shippable. If you had to launch today, could you?

User Testing During Development

Do not wait until launch to get user feedback. Test with real users throughout development:

- Week 2: Paper prototypes or Figma prototypes

- Week 4: Basic functional prototype

- Week 6: Feature-complete alpha

- Week 8: Beta with broader testing

Each testing round reveals issues you cannot see from inside the team.

Launch Strategy

Soft Launch

Launch quietly before you launch loudly. A soft launch lets you identify issues at small scale:

- Friends and family: People who will forgive bugs

- Waitlist users: Early signups who expect rough edges

- Limited geography: Launch in one city or region first

Soft launches typically last two to four weeks. Fix critical issues, observe usage patterns, and refine onboarding before broader release.

App Store Optimisation Basics

Even for an MVP, basic ASO matters:

- App name: Include primary keyword if possible

- Screenshots: Show the core value proposition

- Description: Lead with benefits, not features

- Keywords: Research what users actually search

You can optimise more later, but a weak first impression costs downloads you may never win back.

Launch Marketing

MVPs do not need (and cannot afford) broad marketing. Focus on:

- Existing networks: Your LinkedIn, Twitter, communities you belong to

- Early adopter channels: Product Hunt, Hacker News, relevant subreddits

- Direct outreach: Email people who match your target persona

The goal is not millions of downloads. The goal is hundreds of engaged users who provide feedback.

Measuring MVP Success

Key Metrics

Focus on metrics that indicate product-market fit:

Activation: Do users complete the core action?

- Percentage completing signup

- Percentage completing first [core action]

- Time to first value

Retention: Do users come back?

- Day 1, Day 7, Day 30 retention

- Weekly active users / Monthly active users

- Session frequency

Engagement: Do users find value?

- Session duration

- Actions per session

- Feature usage distribution

Revenue (if applicable): Will users pay?

- Conversion to paid

- Average revenue per user

- Lifetime value (estimated)

Referral: Do users recommend you?

- Net Promoter Score

- Organic referrals

- Social shares
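Net Promoter Score is the easiest of these to compute by hand. As a minimal sketch, assuming the standard 0-10 "how likely are you to recommend us?" survey, it is the percentage of promoters (ratings of 9 or 10) minus the percentage of detractors (ratings of 0 to 6). The sample ratings are made up.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS on the standard 0-10 scale: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey responses, for illustration only.
sample = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(sample))  # 5 promoters, 3 detractors, 10 responses -> 20.0
```

The result ranges from -100 to 100, which is why a target such as "above 30" is a meaningful bar rather than a low one.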

Interpreting Results

Numbers need context:

| Metric | Poor | Acceptable | Good |
|---|---|---|---|
| Day 1 Retention | less than 20% | 20-40% | above 40% |
| Day 7 Retention | less than 10% | 10-20% | above 20% |
| Day 30 Retention | less than 5% | 5-10% | above 10% |

These benchmarks vary by category. Social apps typically see higher retention than utility apps, and consumer apps higher than enterprise apps.
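To make the table concrete, here is a rough sketch that computes Day N retention from install and activity dates, then places the result in a band from the table above. It assumes the "classic" definition (a user counts as retained if they were active exactly N days after install); analytics tools differ on this, and the data shapes and sample users are hypothetical.

```python
from datetime import date, timedelta

# Bands from the table above, in percent: (floor of "acceptable", ceiling of "acceptable").
BENCHMARKS = {1: (20, 40), 7: (10, 20), 30: (5, 10)}


def day_n_retention(installs: dict[str, date], activity: dict[str, set[date]], n: int) -> float:
    """Percentage of installed users who were active exactly n days after install."""
    if not installs:
        raise ValueError("no installs to measure")
    retained = sum(
        1
        for user, installed_on in installs.items()
        if installed_on + timedelta(days=n) in activity.get(user, set())
    )
    return 100 * retained / len(installs)


def classify(n: int, retention_pct: float) -> str:
    """Map a retention percentage to the poor / acceptable / good bands."""
    low, high = BENCHMARKS[n]
    if retention_pct < low:
        return "poor"
    if retention_pct > high:
        return "good"
    return "acceptable"


# Made-up users, for illustration only.
installs = {"a": date(2024, 1, 1), "b": date(2024, 1, 1),
            "c": date(2024, 1, 2), "d": date(2024, 1, 2)}
activity = {"a": {date(2024, 1, 2), date(2024, 1, 8)},
            "b": {date(2024, 1, 3)},
            "c": {date(2024, 1, 3)}}

for n in (1, 7):
    pct = day_n_retention(installs, activity, n)
    print(f"Day {n}: {pct:.0f}% ({classify(n, pct)})")
```

With only four users the percentages are noise, which is the practical point: retention figures mean little until the cohort is large enough to be stable.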

Qualitative Feedback

Numbers tell you what. Users tell you why.

- In-app feedback: Simple rating prompts with optional comments

- User interviews: Five user interviews per week reveal patterns

- Support conversations: Every complaint is a learning opportunity

- Session recordings: Tools like FullStory or LogRocket show actual usage

Combine quantitative and qualitative. Numbers without context mislead. Stories without data mislead differently.

Pivot, Persevere, or Stop

After your MVP launches, you face a decision:

Persevere

If metrics hit your predefined success criteria, continue. Expand features, increase marketing, raise investment.

Signs to persevere:

- Retention exceeds benchmarks

- Users request specific features (rather than complaining that the app does not do everything)

- Organic growth appears

- Paying customers exist

Pivot

If some metrics are promising but others are not, pivot. A pivot changes one dimension while preserving others:

- Customer pivot: Same product, different target market

- Problem pivot: Same customers, different problem

- Solution pivot: Same problem, different solution

- Channel pivot: Same product, different distribution

Pivots preserve learnings. You are not starting over—you are applying what you learned to a new direction.

Stop

If nothing works after genuine effort, stop. Not every idea succeeds. Resources spent on a failing idea cannot be spent on a better one.

Signs to stop:

- No retention despite iterations

- Core assumption proven false

- Market too small to support a business

- Competition has insurmountable advantages

Stopping is not failure. Persisting with a clearly failing idea is failure.

MVP Budget and Timeline

Realistic Timelines

For a well-scoped MVP:

| Phase | Duration |
|---|---|
| Definition and design | 2-3 weeks |
| Core development | 4-6 weeks |
| Testing and polish | 2 weeks |
| Soft launch | 2-4 weeks |
| Total | 10-15 weeks |

If your MVP takes six months, it is not an MVP.
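The total in the table is simply the sum of the per-phase ranges. A small sketch like the following, with the phase durations copied from the table, makes it easy to re-check the total when you adjust a phase for your own project.

```python
# (min_weeks, max_weeks) per phase, taken from the table above.
PHASES = {
    "Definition and design": (2, 3),
    "Core development": (4, 6),
    "Testing and polish": (2, 2),
    "Soft launch": (2, 4),
}

total_min = sum(low for low, _ in PHASES.values())
total_max = sum(high for _, high in PHASES.values())
print(f"Total: {total_min}-{total_max} weeks")  # Total: 10-15 weeks
```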

Budget Ranges

Costs vary enormously based on complexity and team:

| Approach | Cost Range (AUD) |
|---|---|
| Solo founder (your time) | $0 + opportunity cost |
| Offshore freelancers | $15,000-40,000 |
| Local freelancers | $40,000-80,000 |
| Development agency | $60,000-150,000 |
| In-house team | $100,000+ (salaries) |

The cheapest option is rarely the fastest. The most expensive is not always the best. Match your approach to your constraints.

Common MVP Patterns

Concierge MVP

Replace software with human effort. If your app matches users with service providers, manually make those matches initially. This validates demand without building matching algorithms.

Wizard of Oz MVP

The app looks automated but humans operate behind the scenes. Users experience the envisioned product while you validate before building complex automation.

Landing Page MVP

Before building anything, create a landing page describing your app. Collect email signups. Run ads. Measure interest before writing code.

Single-Feature MVP

Build one feature excellently rather than many features poorly. Validate the core before expanding.

Conclusion

MVP development is not about building less. It is about learning more with less. Every feature you do not build is time you can spend validating, iterating, and improving what matters.

Define your hypotheses. Build the minimum that tests them. Measure ruthlessly. Decide with data.

Your first version will be wrong. That is expected. The goal is being wrong quickly and cheaply, then being less wrong in the next iteration.

Ship something. Learn something. Repeat.